Goodbye HTTP: Understanding Old & New Protocols

Hypertext Transfer Protocol (HTTP) is starting to show its age. In fact, the most commonly used version of the protocol, HTTP 1.1, is nearly 20 years old. HTTP was developed in 1989 and HTTP 1.1 was ratified in 1997, but its story actually started long before that. Just think: when its last iteration was made, floppy discs were the go-to storage method and Java was brand new. So there’s no doubt that there is room for an upgrade, but it’s not as easy as it seems.

A Brief History

It all started with the Advanced Research Projects Agency Network (ARPANET). This concept, first published in 1967, became the basis for what would one day be the internet. Then, when electronic mail was invented, ARPANET gave us what we now know as email addresses, with the “@” symbol separating the user’s name from the hostname.

Email could pass through networks, such as ARPANET, or use UNIX-to-UNIX Copy Protocol (UUCP) to connect directly to the host’s site. While UUCP was commonly used to distribute files, users started to use File Transfer Protocol (FTP) email gateways to download files. When HTTP was standardized, tools such as UUCP became obsolete.

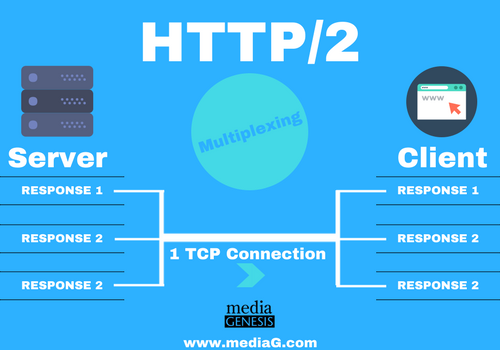

During this period, HTTP was extremely effective. However, with the vast amount of information available today, it is not always the best fit. A key performance problem with HTTP 1.1 is the time it takes to make a request and receive a response. This issue has become more pronounced as the number of images and the amount of JavaScript and CSS on a typical web page continue to increase. Because each connection handles only one outstanding request at a time, fetching assets in parallel generally requires additional Transmission Control Protocol (TCP) connections. However, browsers limit the number of simultaneous open TCP connections per host, and there’s a performance penalty for establishing each new connection. The physical distance between the user and the web server also matters: the farther apart they are, the longer each round trip takes.
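To get a feel for how these limits combine, here is a rough back-of-the-envelope model in Python. It is a sketch, not a measurement: the function name, the default of six connections per host, and the idea that every request costs exactly one round trip are illustrative assumptions, not the behavior of any particular browser.

```python
import math


def http1_fetch_time_ms(num_assets: int, rtt_ms: float, max_connections: int = 6) -> float:
    """Toy estimate of the time to fetch a page's assets over HTTP 1.1.

    Assumptions (hypothetical, for illustration only):
    - all connections are opened in parallel, costing one round trip in total
    - each connection carries one request at a time
    - every request/response pair costs exactly one round trip
    """
    if num_assets <= 0:
        return 0.0
    handshake_ms = rtt_ms  # TCP handshake: roughly one round trip
    batches = math.ceil(num_assets / max_connections)  # requests go out in waves
    return handshake_ms + batches * rtt_ms


# 60 assets, server far away (100 ms round trip) vs. nearby (20 ms round trip)
print(http1_fetch_time_ms(60, 100))  # 1100
print(http1_fetch_time_ms(60, 20))   # 220
```

Even in this simplified model, the same page takes five times longer when the round trip is five times longer, which is why both the connection limit and the distance to the server matter.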

In Comes HTTP/2

HTTP/2 was created to address some significant performance problems with HTTP 1.1. The Internet Engineering Task Force (IETF) developed HTTP/2 through the HTTP Working Group (HTTPWG). It’s assumed that the World Wide Web Consortium (W3C) has contributed to HTTP/2, but officially it’s simply an HTTPWG project. The majority of HTTP/2 has been developed by engineers from Microsoft, Firefox, and Google Chrome.

Adoption of HTTP/2 has increased in the past year as browsers, web servers, and more have committed to supporting the change. Unfortunately for programmers, transitioning to HTTP/2 isn’t always easy and a speed boost isn’t guaranteed. The new protocol challenges some common wisdom when building web apps and many existing tools—such as debugging proxies—don’t support it yet.

What are the benefits of HTTP/2?

HTTP/2 removes the limit of a single outstanding request per TCP connection by multiplexing many requests over one connection. The protocol also automatically compresses header data to reduce unnecessary overhead. Furthermore, responses can be proactively pushed from the server to a client’s cache. Together, these features:

- Reduce “head-of-line blocking,” where one slow request holds up the requests queued behind it

- Deliver assets before the browser has parsed the page and asked for them, by pushing to the client’s cache

- Avoid extra TCP round trips, and improve performance
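The effect of head-of-line blocking can be sketched with a small toy model in Python. The function names and the sample durations are hypothetical, and the model deliberately ignores bandwidth sharing between streams; it only shows when each response would finish if requests are served strictly one after another versus interleaved on one connection.

```python
from itertools import accumulate


def sequential_finish_times(durations_ms):
    """One request at a time: each response waits for every earlier one."""
    return list(accumulate(durations_ms))


def multiplexed_finish_times(durations_ms):
    """Interleaved streams: each response finishes on its own schedule.

    Toy assumption: streams do not compete for bandwidth.
    """
    return list(durations_ms)


# One slow response (900 ms) followed by two quick ones (50 ms each)
durations = [900, 50, 50]
print(sequential_finish_times(durations))   # [900, 950, 1000]
print(multiplexed_finish_times(durations))  # [900, 50, 50]
```

In the sequential case the two quick responses are stuck behind the slow one; with multiplexing they no longer have to wait for it.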

What browsers support HTTP/2?

The top four browsers, Google Chrome, Firefox, Safari, and Edge, all support HTTP/2. In a test on Chrome, HTTP/1 averaged 3.55 seconds and HTTP/2 averaged 0.76 seconds; that is a 2.79 second difference. Now think about this difference across multiple requests and websites.
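For readers who want to put those two averages in perspective, the short Python snippet below works out the difference, the speedup factor, and the percentage reduction. The function name and the dictionary keys are made up for this illustration; only the two timings come from the test described above.

```python
def compare_timings(http1_seconds: float, http2_seconds: float) -> dict:
    """Summarize how much faster the second timing is than the first."""
    saved = http1_seconds - http2_seconds
    return {
        "seconds_saved": round(saved, 2),
        "speedup": round(http1_seconds / http2_seconds, 2),
        "percent_reduction": round(100 * saved / http1_seconds, 1),
    }


print(compare_timings(3.55, 0.76))
# {'seconds_saved': 2.79, 'speedup': 4.67, 'percent_reduction': 78.6}
```

In other words, the HTTP/2 run was a little over four and a half times faster in this particular test, which is not a guarantee for every site.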

HTTP/2 will also work with non-browser clients. For example, programs that use HTTP application programming interfaces (APIs) will be able to use HTTP/2.

And while the HTTP/2 protocol itself doesn’t require Transport Layer Security (TLS), no browser supports unencrypted HTTP/2. This means that, at the moment, HTTP/2 in the browser must run over TLS.
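One way to check whether a server will speak HTTP/2 over TLS is to see which protocol it picks during the TLS handshake. The sketch below uses Python’s standard `ssl` module and the TLS extension called ALPN, which this article does not otherwise cover; the function names are hypothetical, and the host you pass in is up to you.

```python
import socket
import ssl


def make_alpn_context() -> ssl.SSLContext:
    """Build a TLS context that offers HTTP/2 first, then HTTP 1.1."""
    context = ssl.create_default_context()  # verifies certificates by default
    context.set_alpn_protocols(["h2", "http/1.1"])  # "h2" is the ALPN name for HTTP/2
    return context


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0):
    """Connect to host and return the protocol the server selected.

    Returns a string such as "h2" or "http/1.1", or None if the server
    did not take part in ALPN negotiation.
    """
    context = make_alpn_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.com")` with a host of your choice should return `"h2"` when that server supports HTTP/2 over TLS; the result depends entirely on the server being contacted.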

Other Protocols

With so many protocols, it may all seem a bit daunting. Every protocol has its own job, rules, and security measures. Some standard protocols include:

- Secure Shell (SSH) – Secure login to remote computers.

- File Transfer Protocol (FTP) – Transfer files remotely between servers and computers.

- Secure File Transfer Protocol (SFTP) – An extension of SSH that transfers files over a secure channel; also used through Virtual Private Network (VPN) applications.

- Transmission Control Protocol (TCP) – Core transport protocol that runs on top of Internet Protocol (IP), together called TCP/IP; provides reliable connections between applications running on hosts that communicate over IP networks.

- Transport Layer Security (TLS), often still referred to as Secure Sockets Layer (SSL) – Secure connection over a computer network, commonly used to secure communication between servers and applications.

- Hypertext Transfer Protocol (HTTP) – Communication of data, commonly with web browsers. A secure version is called HTTP Secure (HTTPS), which commonly runs over TLS (formerly SSL).

In 1982, TCP/IP became the standard network protocol on ARPANET. 35 years later, TCP/IP is used by billions of people and devices. As time goes on, we’ll need more resources and improved protocols for the resource-intensive web. Over time, several protocols will be decommissioned, and others will take their place.

Do you need help with online protocols? We host email accounts, as well as public and private websites. Our team is here to assist with anything from VPNs to basic or advanced websites and applications. Call us at 248-687-7888 or email us at inquiry@mediaG.com today to find out how we can help your business thrive in the digital world.
